Qualitative research offers a deep dive into human experiences, capturing thoughts, feelings, and behaviors. Unlike quantitative methods that focus on numbers and statistical significance, qualitative approaches prioritize understanding the richness and complexity of human narratives. However, this depth brings forth a challenge: ensuring that the findings are not just insightful but also trustworthy and credible.

Rigor in qualitative research doesn’t stem from replicating results in the traditional sense but from demonstrating transparency, consistency, and reflexivity throughout the research process. Lincoln and Guba (1985) introduced the concept of “trustworthiness” as a framework to assess qualitative research’s quality, emphasizing credibility, transferability, dependability, and confirmability. These criteria shift the focus from mere replication to the authenticity and applicability of the findings.

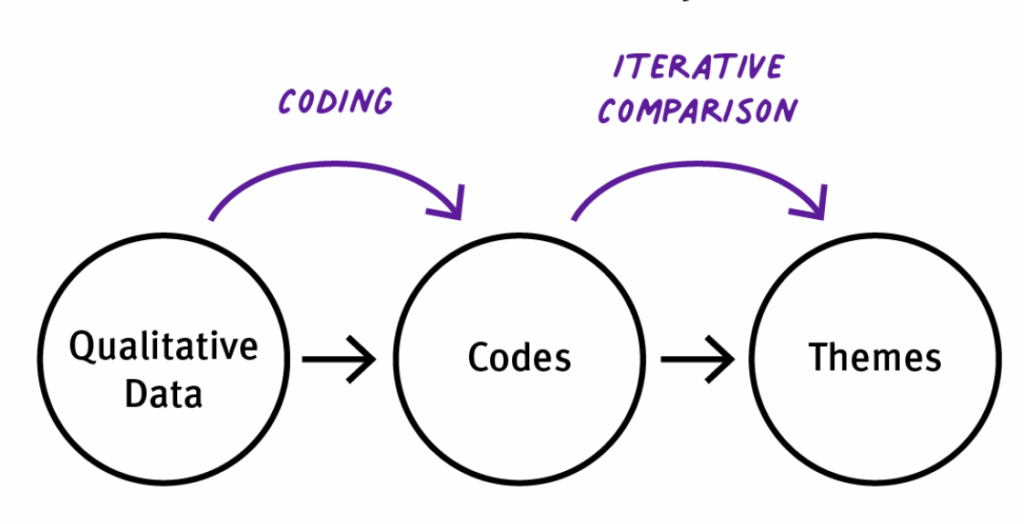

In thematic analysis, a widely used method for identifying and interpreting patterns within qualitative data, maintaining rigor is paramount. Braun and Clarke (2006) highlighted the importance of a systematic approach to coding and theme development, ensuring that the analysis remains grounded in the data while being reflective of the researcher’s interpretations. They later emphasized that rigor involves a transparent and coherent analytical process, where researchers are actively engaged and reflexive about their role in shaping the analysis (Braun & Clarke, 2019).

One practical strategy to enhance rigor in thematic analysis is ensuring intercoder reliability. This involves multiple researchers independently coding the data and then comparing their results to assess consistency. While some scholars argue that complete agreement isn’t always necessary in qualitative research, especially in reflexive thematic analysis, the process of discussing discrepancies can deepen the analysis and enhance its credibility (O’Connor & Joffe, 2020).

Moreover, documenting the coding process, decisions made, and changes implemented provides an audit trail, allowing others to understand and evaluate the research process. This transparency not only bolsters the study’s trustworthiness but also facilitates future research by providing a clear methodological roadmap (Roberts et al., 2019).

While qualitative research embraces subjectivity and depth, it equally demands a rigorous approach to ensure that its findings are both meaningful and credible. By being systematic, transparent, and reflexive, researchers can uphold the integrity of their qualitative studies.

Understanding Intercoder Reliability: Beyond Agreement

In qualitative research, especially when employing thematic analysis, ensuring consistency in how data is interpreted and coded is crucial. This consistency is where intercoder reliability (ICR) comes into play. At its core, ICR refers to the degree of agreement among different researchers (coders) when they independently code the same set of data. It’s a measure that helps establish the trustworthiness of the coding process, ensuring that the findings aren’t solely a product of one researcher’s subjective interpretation.

However, it’s essential to recognize that ICR isn’t about achieving perfect agreement. Qualitative research values the richness of diverse perspectives, and some variation in coding is expected and even beneficial. The goal is not to eliminate all differences but to ensure that the coding process is systematic and that the codes applied are consistent enough to support the study’s conclusions. O’Connor and Joffe (2020) emphasize that while ICR can enhance the transparency and credibility of qualitative analysis, it should be applied thoughtfully, considering the study’s epistemological stance.

In practice, achieving ICR involves several steps. First, developing a clear and comprehensive codebook is vital. This codebook should define each code, provide examples, and outline guidelines for application. Next, coders should undergo training sessions to familiarize themselves with the codebook and practice coding sample data. After initial coding, coders compare their results, discuss discrepancies, and refine the codebook as necessary. This iterative process not only improves consistency but also deepens the team’s understanding of the data.
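The comparison step can be made concrete with a small tally of which codes the coders tend to confuse, since those pairs are usually the ones whose codebook definitions need refining. The following is a minimal sketch, assuming each coder applies exactly one code per segment; the function name, segment IDs, and code names are all hypothetical.

```python
from collections import Counter


def disagreement_pairs(coder_a, coder_b):
    """Count how often each pair of differing codes landed on the same segment.

    coder_a and coder_b map segment IDs to the single code each coder applied.
    Only segments coded by both coders are compared.
    """
    pairs = Counter()
    for segment_id in coder_a.keys() & coder_b.keys():
        code_a, code_b = coder_a[segment_id], coder_b[segment_id]
        if code_a != code_b:
            # Sort so ("cost", "access") and ("access", "cost") count as one pair.
            pairs[tuple(sorted((code_a, code_b)))] += 1
    return pairs.most_common()


# Hypothetical sample data: segment IDs and code names are invented.
coder_a = {"s1": "access", "s2": "cost", "s3": "stigma", "s4": "cost", "s5": "access"}
coder_b = {"s1": "access", "s2": "access", "s3": "stigma", "s4": "access", "s5": "cost"}

for (first, second), count in disagreement_pairs(coder_a, coder_b):
    print(f"{first} vs {second}: {count} segment(s)")
```

In this invented sample, every disagreement involves the same two codes, which would suggest revisiting how the codebook distinguishes them before coding continues.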

It’s also worth noting that the use of statistical measures to assess ICR, such as Cohen’s Kappa or Krippendorff’s Alpha, is more common in quantitative content analysis. In qualitative research, especially when adopting a reflexive thematic analysis approach, the emphasis is more on the process of achieving consensus and understanding differences rather than solely relying on statistical indices. Braun and Clarke (2019) argue that in such contexts, collaborative discussions among coders are invaluable for enriching the analysis.
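For teams that do report a statistical index, the arithmetic behind Cohen’s Kappa is short enough to show. The sketch below is illustrative only: it assumes two coders, one code per segment, and invented labels, and it computes kappa as (observed agreement − chance agreement) / (1 − chance agreement).

```python
from collections import Counter


def percent_agreement(coder_a, coder_b):
    """Share of segments to which both coders assigned the same code."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must label the same non-empty set of segments")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return matches / len(coder_a)


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders assigning one code per segment."""
    n = len(coder_a)
    p_observed = percent_agreement(coder_a, coder_b)
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    # Chance agreement: probability both coders pick the same code independently.
    p_chance = sum(counts_a[code] * counts_b[code] for code in counts_a) / (n * n)
    if p_chance == 1.0:
        # Both coders used one identical code throughout; kappa is formally
        # undefined here, and returning 1.0 is a convention assumed by this sketch.
        return 1.0
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical labels for ten segments.
coder_a = ["barrier"] * 5 + ["support"] * 5
coder_b = ["barrier"] * 4 + ["support"] * 5 + ["barrier"]

print(percent_agreement(coder_a, coder_b))       # 0.8
print(round(cohens_kappa(coder_a, coder_b), 2))  # 0.6
```

The gap between the two numbers shows why raw percent agreement can flatter a coding scheme: with only two evenly used codes, half of the agreement would be expected by chance alone.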

Understanding the dynamics of ICR is essential for researchers aiming to produce rigorous and credible qualitative studies. By focusing on the process of achieving consistent coding through clear guidelines, training, and collaborative discussions, researchers can ensure that their thematic analysis stands on solid ground.

Why Intercoder Reliability Matters in Thematic Analysis

In thematic analysis, the process of identifying patterns or themes within qualitative data, consistency in how data is interpreted and coded is paramount. Intercoder reliability (ICR), the degree of agreement among different researchers (coders) when they independently code the same set of data, is the usual way to assess that consistency.

ICR is essential because it enhances the credibility and trustworthiness of the research findings. When multiple coders consistently apply the same codes to data segments, it indicates that the coding scheme is clear and that the findings are not solely a product of one researcher’s subjective interpretation. This consistency is particularly important when the research aims to inform policy decisions, develop interventions, or contribute to theoretical frameworks.

Moreover, ICR facilitates collaborative research efforts. In projects involving large datasets or multiple researchers, ICR ensures that all team members are aligned in their coding approaches, maintaining consistency throughout the analysis process. This alignment is crucial for producing coherent and unified findings, especially in interdisciplinary studies where researchers may come from diverse backgrounds.

However, it’s important to recognize that achieving ICR is not about eliminating all differences in interpretation. Qualitative research values the richness of diverse perspectives, and some variation in coding is expected and even beneficial. The goal is to ensure that the coding process is systematic and that the codes applied are consistent enough to support the study’s conclusions. O’Connor and Joffe (2020) emphasize that while ICR can enhance the transparency and credibility of qualitative analysis, it should be applied thoughtfully, considering the study’s epistemological stance.

In practice, achieving ICR involves developing a clear and comprehensive codebook, providing training sessions for coders, and engaging in iterative coding processes where discrepancies are discussed and resolved. This collaborative approach not only improves consistency but also deepens the team’s understanding of the data, leading to more nuanced and robust findings.

Understanding the significance of ICR in thematic analysis sets the stage for exploring practical strategies to enhance it. These strategies not only bolster the reliability of the findings but also contribute to a more rigorous and transparent research process.

Strategies for Enhancing Intercoder Reliability

Achieving ICR in thematic analysis is crucial for ensuring that qualitative research findings are both credible and trustworthy. While some variation in coding is expected due to the interpretive nature of qualitative research, implementing specific strategies can enhance consistency among coders.

1. Develop a Comprehensive Codebook

A well-structured codebook serves as a foundational tool for consistent coding. It should include clear definitions, inclusion and exclusion criteria, and examples for each code. This clarity helps coders apply codes uniformly across the dataset. Roberts et al. (2019) emphasize that a detailed codebook contributes to the rigor and replicability of thematic analysis.

2. Conduct Training and Calibration Sessions

Before commencing the main coding process, it’s beneficial to organize training sessions where coders can familiarize themselves with the codebook and practice coding sample data. These sessions allow for the identification and resolution of discrepancies in code application, fostering a shared understanding among coders. O’Connor and Joffe (2020) highlight the importance of such preparatory steps in enhancing ICR.

3. Engage in Collaborative Coding

Collaborative coding involves coders working together to discuss and reconcile differences in code application. This process not only improves consistency but also enriches the analysis by incorporating diverse perspectives. Ganji et al. (2018) suggest that collaborative approaches can simplify complex qualitative coding tasks and improve inter-coder agreement.

4. Utilize Qualitative Data Analysis Software

Employing software tools like NVivo or MAXQDA can aid in organizing and managing coded data efficiently. These tools often include features that facilitate the comparison of coding between different coders, making it easier to assess and enhance ICR. Such software can also assist in maintaining an audit trail of coding decisions, contributing to the transparency of the research process.

5. Implement Regular Review Meetings

Scheduling periodic meetings to review coding progress and discuss any emerging issues can help maintain consistency throughout the analysis. These meetings provide opportunities to address ambiguities in the codebook, update coding guidelines, and ensure that all coders remain aligned in their approach.

By systematically applying these strategies, researchers can enhance intercoder reliability in thematic analysis, thereby strengthening the overall quality and credibility of their qualitative research findings.
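The codebook described in the first strategy lends itself to a simple structured representation. The sketch below is one possible layout, not a prescribed format: the class name, field names, and sample entries are assumptions made for illustration. It also flags entries that lack a definition, inclusion or exclusion criteria, or examples.

```python
from dataclasses import dataclass, field


@dataclass
class CodebookEntry:
    """One code in the codebook; field names are illustrative."""
    name: str
    definition: str
    include_when: list = field(default_factory=list)
    exclude_when: list = field(default_factory=list)
    examples: list = field(default_factory=list)


def incomplete_entries(codebook):
    """Return {code name: [missing parts]} for entries lacking any component."""
    problems = {}
    for entry in codebook:
        missing = []
        if not entry.definition.strip():
            missing.append("definition")
        if not entry.include_when:
            missing.append("inclusion criteria")
        if not entry.exclude_when:
            missing.append("exclusion criteria")
        if not entry.examples:
            missing.append("examples")
        if missing:
            problems[entry.name] = missing
    return problems


# Hypothetical entries for illustration only.
codebook = [
    CodebookEntry(
        name="cost",
        definition="Participant describes financial obstacles to care.",
        include_when=["mentions fees, prices, or inability to pay"],
        exclude_when=["travel distance without mention of expense"],
        examples=["I just couldn't afford the visit."],
    ),
    CodebookEntry(name="access", definition="Participant describes difficulty reaching services."),
]

print(incomplete_entries(codebook))
```

Running a check like this before calibration sessions gives the team a short list of entries to complete, rather than discovering the gaps mid-coding.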

Collaborative Versus Independent Coding Approaches

In thematic analysis, the decision between collaborative and independent coding significantly influences the depth, reliability, and interpretive richness of qualitative findings. Each approach offers distinct advantages and challenges, and understanding these can guide researchers in selecting the most appropriate strategy for their study.

Collaborative Coding

Collaborative coding involves multiple researchers working together to code qualitative data, often engaging in discussions to reconcile differences and refine themes. This approach leverages the diverse backgrounds and perspectives of team members, leading to a more comprehensive understanding of the data. By integrating varied viewpoints, collaborative coding can uncover themes that might be overlooked by a single researcher, thereby enriching the analysis.

Moreover, collaborative coding fosters reflexivity among researchers. Engaging in discussions about coding decisions encourages team members to reflect on their assumptions and biases, promoting a more critical and self-aware analytical process. This reflexivity enhances the credibility of the research findings, as it demonstrates a commitment to transparency and methodological rigor (Zreik et al., 2022).

However, collaborative coding also presents challenges. Coordinating schedules, managing differing interpretations, and reaching consensus can be time-consuming and may require effective communication and conflict-resolution skills. Additionally, without clear protocols, there is a risk of inconsistencies in coding, which can compromise the reliability of the analysis (Ganji et al., 2018).

Independent Coding

Independent coding entails researchers analyzing the data separately and applying codes based on a predefined codebook. This method can enhance the consistency of coding, as each researcher applies the same criteria without influence from others. Independent coding is particularly useful in large-scale studies where efficiency and uniformity are paramount.

Furthermore, independent coding allows for the measurement of intercoder reliability, providing quantitative evidence of consistency in coding decisions. This can strengthen the validity of the research findings, especially in studies where objectivity is emphasized (O’Connor & Joffe, 2020).

Nevertheless, independent coding may limit the depth of analysis. Without collaborative discussions, researchers might miss opportunities to explore alternative interpretations or to challenge their assumptions. This could result in a more superficial understanding of the data, potentially overlooking complex or nuanced themes.

Integrating Approaches

Given the strengths and limitations of both collaborative and independent coding, researchers may consider integrating these approaches to capitalize on their respective benefits. For instance, initial independent coding can be followed by collaborative discussions to reconcile differences and refine themes. This hybrid strategy combines the consistency of independent coding with the depth and reflexivity of collaborative analysis, leading to a more robust and credible thematic interpretation.
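The hybrid workflow can be sketched in two steps: accept the segments on which independent coders already agree, then apply the codes the team settles on in discussion to the rest. This is a minimal illustration under the assumption of two coders and one code per segment; the function names and data are hypothetical.

```python
def merge_independent_codings(coder_a, coder_b):
    """Split jointly coded segments into agreed codes and items to discuss."""
    agreed, to_discuss = {}, {}
    for segment_id in sorted(coder_a.keys() & coder_b.keys()):
        code_a, code_b = coder_a[segment_id], coder_b[segment_id]
        if code_a == code_b:
            agreed[segment_id] = code_a
        else:
            to_discuss[segment_id] = (code_a, code_b)
    return agreed, to_discuss


def apply_resolutions(agreed, to_discuss, resolutions):
    """Combine agreed codes with codes chosen in discussion.

    Returns the final coding plus any segments still awaiting a decision.
    """
    final = dict(agreed)
    unresolved = []
    for segment_id in to_discuss:
        if segment_id in resolutions:
            final[segment_id] = resolutions[segment_id]
        else:
            unresolved.append(segment_id)
    return final, unresolved


# Hypothetical independent codings of four segments.
coder_a = {"s1": "access", "s2": "cost", "s3": "stigma", "s4": "cost"}
coder_b = {"s1": "access", "s2": "access", "s3": "stigma", "s4": "stigma"}

agreed, to_discuss = merge_independent_codings(coder_a, coder_b)
# Outcome of the (hypothetical) team discussion; s4 is still open.
final, unresolved = apply_resolutions(agreed, to_discuss, {"s2": "cost"})
print(final)
print(unresolved)
```

Keeping the unresolved list separate, instead of forcing a code onto every segment, leaves room for the interpretive discussion that the collaborative phase is meant to provide.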

Ultimately, the choice between collaborative and independent coding should align with the research objectives, the nature of the data, and the resources available. By thoughtfully selecting and potentially integrating these approaches, researchers can enhance the rigor and richness of their qualitative analyses.

Documentation and Transparency in Reporting Intercoder Reliability

In qualitative research, especially when employing thematic analysis, documenting and transparently reporting ICR is essential. ICR refers to the degree of agreement among different researchers (coders) when they independently code the same set of data. Transparent documentation of ICR processes enhances the credibility and trustworthiness of research findings.

Importance of Documentation in ICR

Detailed documentation of the coding process allows researchers to demonstrate the systematic approach taken during analysis. Roberts et al. (2019) emphasize that a well-structured codebook, which includes clear definitions and examples for each code, is vital for achieving consistency among coders. Such documentation not only facilitates the coding process but also enables other researchers to understand and potentially replicate the study.

Moreover, maintaining an audit trail, a comprehensive record of all decisions made during the research process, further strengthens the study’s transparency. This includes documenting changes to codes, reasons for merging or splitting codes, and discussions held to resolve discrepancies among coders. An audit trail provides a clear pathway from raw data to final themes, allowing readers to trace the analytical process (Belotto, 2018).
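An audit trail of this kind can be as simple as an append-only log of codebook decisions. The sketch below is one hypothetical way to structure it; the class names, fields, action labels, and sample entries are assumptions, and QDA software may offer its own logging that serves the same purpose.

```python
import json
from dataclasses import dataclass, asdict
from datetime import date


@dataclass(frozen=True)
class AuditEntry:
    when: str          # ISO date of the decision
    action: str        # e.g. "add", "merge", "split", "redefine"
    codes: tuple       # codes affected by the decision
    rationale: str     # why the team made the change
    decided_by: tuple  # who took part in the decision


class AuditTrail:
    """Append-only record of coding decisions."""

    def __init__(self):
        self._entries = []

    def record(self, action, codes, rationale, decided_by, when=None):
        entry = AuditEntry(
            when=when or date.today().isoformat(),
            action=action,
            codes=tuple(codes),
            rationale=rationale,
            decided_by=tuple(decided_by),
        )
        self._entries.append(entry)
        return entry

    def history_for(self, code):
        """All recorded decisions that touched a given code, oldest first."""
        return [e for e in self._entries if code in e.codes]

    def to_json_lines(self):
        """One JSON object per line, suitable for archiving with the dataset."""
        return "\n".join(json.dumps(asdict(e)) for e in self._entries)


# Hypothetical decisions for illustration.
trail = AuditTrail()
trail.record("merge", ["cost", "affordability"],
             "Coders could not separate the two in practice.",
             ["coder_a", "coder_b"], when="2024-03-04")
trail.record("redefine", ["access"],
             "Narrowed to physical access after calibration.",
             ["coder_a", "coder_b"], when="2024-03-11")
print(trail.to_json_lines())
```

Because entries are immutable and only ever appended, the log preserves the order in which the codebook evolved, which is what lets a reader trace a final theme back to earlier decisions.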

Transparent Reporting of ICR

Transparent reporting involves clearly stating how ICR was assessed: whether the approach was qualitative or quantitative, any statistical measures employed (e.g., Cohen’s Kappa, Krippendorff’s Alpha), the number of coders involved, and the process followed to resolve disagreements. O’Connor and Joffe (2020) argue that such transparency is crucial for readers to assess the reliability of the findings. Additionally, reporting the percentage of data double-coded and the rationale behind the chosen approach provides context and allows for a better understanding of the study’s rigor.
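Two of the figures mentioned above, the share of data double-coded and Krippendorff’s Alpha, can be computed from the same table of coded segments. The sketch below handles nominal codes only and uses the coincidence-matrix form of alpha (one minus observed over expected disagreement); the function names and sample data are hypothetical, and a dedicated statistics package would be preferable for anything beyond illustration.

```python
from collections import Counter
from itertools import permutations


def share_double_coded(units):
    """Fraction of segments coded by at least two coders."""
    return sum(len(values) >= 2 for values in units) / len(units)


def krippendorff_alpha_nominal(units):
    """Krippendorff's alpha for nominal codes.

    units: one list per segment, holding the code each coder assigned
    (coders who skipped the segment are simply absent from the list).
    """
    coincidences = Counter()
    for values in units:
        m = len(values)
        if m < 2:
            continue  # a segment coded once carries no agreement information
        # Every ordered pair of values from different coders, weighted by 1/(m-1).
        for c, k in permutations(values, 2):
            coincidences[(c, k)] += 1 / (m - 1)

    totals = Counter()
    for (c, _k), weight in coincidences.items():
        totals[c] += weight
    n = sum(totals.values())
    if n <= 1:
        raise ValueError("need at least one segment coded by two or more coders")

    observed = sum(w for (c, k), w in coincidences.items() if c != k) / n
    expected = sum(
        totals[c] * totals[k] for c in totals for k in totals if c != k
    ) / (n * (n - 1))
    if expected == 0:
        raise ValueError("alpha is undefined when only one code was ever used")
    return 1 - observed / expected


# Hypothetical data: ten double-coded segments plus one coded by a single coder.
units = (
    [["barrier", "barrier"]] * 4
    + [["support", "support"]] * 4
    + [["barrier", "support"], ["support", "barrier"]]
    + [["barrier"]]
)

print(round(share_double_coded(units), 2))          # 0.91
print(round(krippendorff_alpha_nominal(units), 2))  # 0.62
```

Unlike Cohen’s Kappa, this formulation accepts more than two coders and tolerates segments that not every coder saw, which is why the two measures are often reported for different study designs.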

Furthermore, discussing the challenges encountered during coding and how they were addressed offers valuable insights into the research process. This includes reflecting on the subjective nature of qualitative analysis and the steps taken to mitigate potential biases. Such reflexivity not only enhances the study’s credibility but also contributes to the broader discourse on qualitative research methodologies (Hemmler, 2023).

Thorough documentation and transparent reporting of intercoder reliability are fundamental to the integrity of qualitative research. By meticulously recording the coding process, maintaining an audit trail, and openly discussing the methods and challenges associated with ICR, researchers can bolster the trustworthiness of their findings and contribute to the advancement of qualitative methodologies.

Balancing Intercoder Reliability with Interpretive Flexibility

In qualitative research, particularly thematic analysis, achieving a balance between ICR and interpretive flexibility is crucial. ICR ensures consistency in coding across different researchers, while interpretive flexibility allows for a deeper, nuanced understanding of the data. Striking this balance enhances the credibility and richness of qualitative findings.

Understanding Intercoder Reliability: ICR refers to the degree of agreement among coders when they independently apply codes to qualitative data. High ICR suggests that the coding process is systematic and that the codebook is well-defined. O’Connor and Joffe (2020) highlight that assessing ICR can enhance the transparency and trustworthiness of qualitative analysis. However, they also caution against overemphasizing statistical measures of agreement, as this may overshadow the interpretive nature of qualitative research.

The Role of Interpretive Flexibility: Interpretive flexibility acknowledges that researchers bring their perspectives and experiences to the analysis, influencing how they interpret data. This subjectivity is not a limitation but a strength, allowing for multiple interpretations and a richer understanding of the data. Braun and Clarke (2021) argue that in reflexive thematic analysis, the researcher’s active role in theme development is essential, and seeking consensus through ICR measures may not always be appropriate.

Strategies for Balancing ICR and Interpretive Flexibility

The following strategies help maintain the balance between consistent coding and interpretive flexibility:

- Develop a Comprehensive Codebook: Creating a detailed codebook with clear definitions and examples for each code helps coders apply codes consistently. This structure provides a common framework, reducing ambiguity while allowing for interpretive insights. Roberts et al. (2019) emphasize that a well-structured codebook contributes to the rigor and replicability of thematic analysis.

- Conduct Training and Calibration Sessions: Before the main coding process, organize training sessions where coders can practice applying the codebook to sample data. These sessions help identify and resolve discrepancies in code application, fostering a shared understanding among coders. O’Connor and Joffe (2020) highlight the importance of such preparatory steps in enhancing ICR.

- Engage in Reflexive Discussions: Encouraging open discussions among coders about coding decisions and interpretations promotes reflexivity. These conversations allow team members to reflect on their assumptions and biases, leading to a more critical and self-aware analytical process. This reflexivity enhances the credibility of the research findings (Zreik et al., 2022).

- Utilize Qualitative Data Analysis Software: Employing software tools like NVivo or MAXQDA can aid in organizing and managing coded data efficiently. These tools often include features that facilitate the comparison of coding between different coders, making it easier to assess and enhance ICR. Such software can also assist in maintaining an audit trail of coding decisions, contributing to the transparency of the research process.

- Implement Regular Review Meetings: Scheduling periodic meetings to review coding progress and discuss any emerging issues can help maintain consistency throughout the analysis. These meetings provide opportunities to address ambiguities in the codebook, update coding guidelines, and ensure that all coders remain aligned in their approach.

- Embrace Interpretive Differences: Recognize that some variation in coding is expected due to the interpretive nature of qualitative research. Instead of striving for complete agreement, focus on understanding the reasons behind differing interpretations. These differences can offer valuable insights and enrich the analysis (Braun & Clarke, 2021).

- Transparent Reporting: Document the coding process, including how disagreements were handled and decisions made. Transparent reporting enhances the study’s credibility and allows others to understand and evaluate the research process (Belotto, 2018).

Balancing intercoder reliability with interpretive flexibility is essential in thematic analysis. While ICR contributes to the consistency and credibility of the analysis, interpretive flexibility enriches the understanding of complex qualitative data. By implementing clear coding frameworks, engaging in reflexive discussions, and transparently reporting the coding process, researchers can navigate the tension between consistency and interpretation, leading to robust and insightful qualitative research.

Future Directions: Technology and Evolving Practices in Thematic Analysis

Thematic analysis has long been a cornerstone of qualitative research, offering a structured yet flexible approach to identifying patterns within data. As technology advances, researchers are increasingly integrating digital tools to enhance the efficiency, accuracy, and depth of their analyses. This evolution presents both opportunities and challenges, reshaping how thematic analysis is conducted and interpreted.

Integration of Artificial Intelligence in Thematic Analysis

Artificial Intelligence (AI), particularly large language models (LLMs), is making significant inroads into qualitative research. Recent studies have explored the efficacy of AI-assisted thematic analysis, comparing it to traditional manual methods. For instance, Bennis and Mouwafaq (2025) conducted a comparative study assessing nine generative AI models’ performance in analyzing qualitative data related to cutaneous leishmaniasis. Their findings indicated that advanced AI models demonstrated impressive congruence with human-coded reference standards, suggesting that AI can effectively augment the thematic analysis process.

However, while AI offers efficiency and scalability, it also raises concerns about the depth and nuance of analysis. Qualitative research often requires an understanding of context, subtext, and cultural nuances, areas where AI may fall short. Therefore, many researchers advocate for a hybrid approach, where AI handles preliminary coding or data organization, and human researchers provide interpretive depth and contextual understanding.

Advancements in Computer-Assisted Qualitative Data Analysis Software (CAQDAS)

Beyond AI, traditional computer-assisted qualitative data analysis software (CAQDAS) continues to evolve. Tools like NVivo, MAXQDA, and ATLAS.ti have incorporated features that facilitate collaborative coding, visualization of themes, and integration with other data sources. These advancements enable researchers to manage large datasets more effectively and enhance the transparency of their analytical processes. Moreover, the development of standardized data exchange formats, such as QDA-XML, allows for greater interoperability between different CAQDAS tools, promoting collaboration across research teams and institutions.

Embracing Online and Mobile Qualitative Research Methods

The rise of digital communication platforms has expanded the horizons of qualitative research. Online qualitative research methods, including virtual focus groups, online interviews, and digital ethnography, offer researchers access to diverse populations and real-time data collection. Mobile ethnography, in particular, empowers participants to document their experiences in real time using their smartphones. This approach provides rich, contextual data and reduces recall bias, enhancing the authenticity of the collected information.

Ethical Considerations and the Need for Reflexivity

As technology becomes more embedded in qualitative research, ethical considerations gain prominence. Issues related to data privacy, informed consent, and the potential for algorithmic bias necessitate a heightened level of reflexivity among researchers. Ensuring that technological tools are used responsibly and that participants’ rights are protected remains paramount.

The integration of technology into thematic analysis heralds a new era of possibilities for qualitative research. While tools like AI and CAQDAS offer enhanced efficiency and capabilities, they should complement, not replace, the critical interpretive role of the researcher. By thoughtfully embracing these advancements and remaining vigilant about ethical considerations, researchers can enrich their analyses and contribute to the evolving landscape of qualitative inquiry.

Conclusion

Intercoder reliability (ICR) is more than just a technical checkpoint in qualitative research; it is a foundational element of integrity and trust. In thematic analysis, where researchers aim to make sense of complex human experiences and social patterns, maintaining a consistent and thoughtful approach to coding is critical. ICR helps ensure that the themes and interpretations presented are not the result of one individual’s subjective lens but are rooted in a shared, systematic understanding of the data.

That said, as we’ve explored throughout this discussion, ICR must be balanced with interpretive flexibility. Qualitative research values depth, context, and nuance. It thrives on the perspectives that each researcher brings to the table. Rather than forcing full agreement, ICR should be viewed as a guidepost that keeps teams aligned while still leaving space for thoughtful dialogue and complexity (Braun & Clarke, 2021).


References

Belotto, M. J. (2018). Data Analysis Methods for Qualitative Research: Managing the Challenges of Coding, Interrater Reliability, and Thematic Analysis. The Qualitative Report, 23(11), 2622–2633.

Bennis, I., & Mouwafaq, S. (2025). Advancing AI-driven thematic analysis in qualitative research: A comparative study of nine generative models on Cutaneous Leishmaniasis data. BMC Medical Informatics and Decision Making, 25, Article 124. https://doi.org/10.1186/s12911-025-02961-5

Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101. https://doi.org/10.1191/1478088706qp063oa

Braun, V., & Clarke, V. (2019). Reflecting on reflexive thematic analysis. Qualitative Research in Sport, Exercise and Health, 11(4), 589–597. https://doi.org/10.1080/2159676X.2019.1628806

Braun, V., & Clarke, V. (2021). One size fits all? What counts as quality practice in (reflexive) thematic analysis? Qualitative Research in Psychology, 18(3), 328–352. https://doi.org/10.1080/14780887.2020.1769238

Ganji, A., Orand, M., & McDonald, D. W. (2018). Ease on Down the Code: Complex Collaborative Qualitative Coding Simplified with ‘Code Wizard’. arXiv preprint arXiv:1812.11622. https://arxiv.org/abs/1812.11622

Hemmler, V. L. (2023). Inter-rater Consistency in Qualitative Coding. ERIC. Retrieved from https://files.eric.ed.gov/fulltext/ED643124.pdf

Lincoln, Y. S., & Guba, E. G. (1985). Naturalistic inquiry. Sage.

O’Connor, C., & Joffe, H. (2020). Intercoder reliability in qualitative research: Debates and practical guidelines. International Journal of Qualitative Methods, 19, 1–13. https://doi.org/10.1177/1609406919899220

Roberts, K., Dowell, A., & Nie, J.-B. (2019). Attempting rigour and replicability in thematic analysis of qualitative research data; a case study of codebook development. BMC Medical Research Methodology, 19(1), 66. https://doi.org/10.1186/s12874-019-0707-y

Zreik, T., El Masri, R., Chaar, S., Ali, R., Meksassi, B., Elias, J., & Lokot, M. (2022). Collaborative Coding in Multi-National Teams: Benefits, Challenges and Experiences Promoting Equitable Research. International Journal of Qualitative Methods, 21, 1–14. https://doi.org/10.1177/16094069221139474