Machine learning is no longer just for tech companies or data scientists, it’s showing up in university hallways, research labs, and even student dissertations. As more fields embrace data-driven methods, graduate students within dissertation services are beginning to explore how machine learning (ML) can help answer research questions. But with all the hype around AI, it’s easy to feel pressure to include machine learning in your work just because it sounds impressive.

The truth is that machine learning can be incredibly powerful in a dissertation, but only if it’s used thoughtfully and for the right reasons. Predictive models, one of the most common applications of machine learning, are particularly helpful when your quantitative research involves making sense of large datasets, identifying patterns, or forecasting outcomes. They can save time, bring new insights, and help strengthen your argument. But that doesn’t mean they’re always necessary or even appropriate.

In academic settings, the goal isn’t just to build a high-performing model. You need to show that your method makes sense in the context of your research question, that you understand the theory behind it, and that your results are reliable. According to Jordan and Mitchell (2015), the key strength of machine learning lies in its ability to generalize from data, but this strength only matters when your dataset is good quality and your research question is clearly defined.

For example, if you’re working on a sociology dissertation exploring social mobility, you might use logistic regression, just like big data analytics companies do, to predict which factors most influence upward movement across generations. If you’re in environmental science, you could use a decision tree to predict where deforestation is most likely to occur based on satellite data. In both cases, the model isn’t just doing math, it’s helping tell a story backed by evidence.

That said, predictive modeling isn’t a magic bullet. It comes with limitations, such as the risk of overfitting, the need for large datasets, and the potential for bias if the data reflects social inequalities (Obermeyer et al., 2019). These are serious concerns, especially in academic work where transparency and rigor matter deeply.

This blog is meant to help you think through when it actually makes sense to bring predictive modeling into your dissertation service. We’ll look at the types of research questions that work well with ML, what kind of data you need, how to justify your methods to your committee, and how to avoid common mistakes. Whether you’re already dabbling in Python or just starting to wonder what all the fuss is about, this guide is here to help you make smart, grounded choices.

1. Understanding Predictive Modeling: A Primer for Researchers

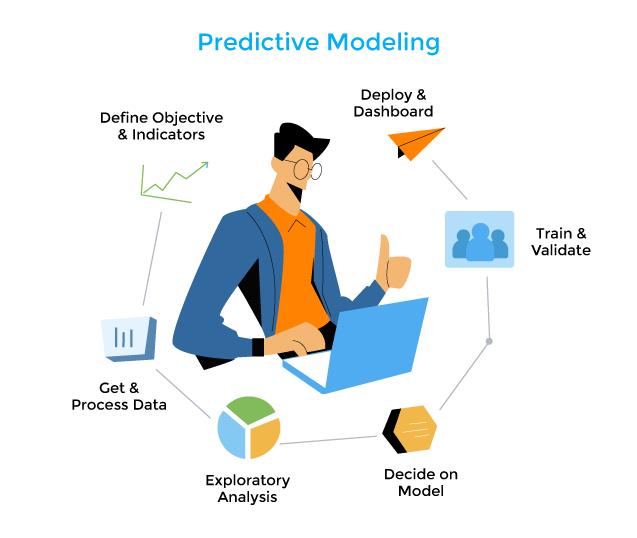

Before jumping into predictive modeling for your dissertation, it’s important to understand what it actually is and what it’s not. At its core, predictive modeling is about using existing data to make educated guesses about the future or to uncover patterns that might not be immediately obvious. It’s less about explaining why something happens and more about predicting what is likely to happen next.

In academic research, especially in social sciences and health sciences, most students are trained in traditional statistical methods like linear regression, t-tests, or ANOVA. These methods usually focus on testing hypotheses or understanding relationships between variables. Predictive modeling, on the other hand, is more about building models that perform well on new, unseen data. It prioritizes accuracy and generalization over explanation (Shmueli, 2010).

Let’s take an example. If you’re researching student success in higher education, traditional stats might help you test whether study habits are significantly associated with GPA. A predictive model, like a random forest or support vector machine, would instead try to predict which students are most likely to drop out based on a wide range of variables. You might not get a simple “cause and effect” answer, but the model could be more useful for early interventions.

Predictive models fall under the umbrella of supervised machine learning. That means the model is trained on data where the outcome is already known, like whether someone defaulted on a loan or was readmitted to the hospital, and it learns to spot the patterns that lead to that outcome. There are two main types: regression models, used for predicting numerical outcomes (like income or test scores), and classification models, used for predicting categories (like pass/fail or yes/no outcomes) (James et al., 2013).

A common misunderstanding is that predictive models are “smarter” than traditional methods. They’re not. They’re just different tools for different jobs. In fact, predictive models often require much more data to work well, and they can easily go wrong if the statistical data is messy, biased, or not representative of the population you’re studying (Bzdok et al., 2018). That’s why it’s so important to know what kind of model you’re using, what assumptions it makes, and how to evaluate whether it’s working.

Another thing to keep in mind is interpretability. Some predictive models, like decision trees or logistic regression, are relatively easy to explain. Others, like neural networks or ensemble methods, can act like black boxes. In quantitative research, especially at the dissertation level, you need to be able to explain your methods and justify your choices. A high prediction accuracy alone isn’t enough.

So, before you dive into coding or downloading packages, make sure you’re clear on what predictive modeling does and whether it’s the right approach for your research. It can be a powerful addition to your academic toolbox, but like any tool, it needs to be used with care and a solid understanding of its strengths and limits.

2. Dataset Considerations: Size, Structure, and Suitability

Once you start thinking about using predictive models in your dissertation, the next big question is whether your data is actually up to the task. Not every dataset is suited for machine learning, and forcing it can lead to weak models and misleading results. So, before diving into any modeling, it’s worth stepping back to ask: Do I have the right data for this?

Size Really Does Matter

First off, machine learning thrives on quantitative data– lots of it. Predictive models need a large number of observations to spot patterns and generalize well. Traditional statistics can sometimes work with smaller datasets, especially when testing clear, well-defined hypotheses. But machine learning models typically need more data because they learn from examples, not rules. According to Kuhn and Johnson (2013), the performance of predictive models often improves with more training data. This is especially true for complex models like neural networks or ensemble methods. If your dataset only has 100 rows, you might struggle to build a model that doesn’t overfit or mislead. A good rule of thumb is to have several hundred, if not thousands, of examples, especially if you have many variables.

It’s Not Just Size—It’s Structure

Besides the amount of data, the structure of quantitative methods matters as well. Predictive modeling usually expects “tidy” data, meaning each row is an observation, each column is a variable, and there are no major gaps or duplicates. This kind of structure allows algorithms to process the data efficiently and without confusion. If your data is nested (e.g., students within classrooms), time-series (e.g., hourly temperature readings), or text-based (e.g., interview transcripts), you’ll need to either restructure it or use models that are designed to handle those formats. For example, time-series models like ARIMA or LSTM are built for sequential data, while random forests work better with flat, tabular data (Hyndman & Athanasopoulos, 2018).

Quality Over Quantity

Even if you have a lot of data, it’s not helpful if the quality is poor. Messy quantitative data, like missing values, outliers, or inconsistent labeling, can throw off a model or introduce bias. Before modeling, you’ll need to clean your data, fill in missing values (if appropriate), and possibly normalize or encode your variables. In fact, preprocessing often takes up more time than building the model itself (Rahm & Do, 2000). You should also be cautious about class imbalance. Say you’re trying to predict student dropouts, but only 5% of students in your dataset actually dropped out. A naive model might just predict “no dropout” for everyone and still score 95% accuracy, but that’s not helpful. In such cases, techniques like oversampling, undersampling, or using evaluation metrics like the F1 score can help you avoid false confidence in your model’s performance.

Are Your Variables Meaningful?

Finally, consider the features (i.e., variables) in your dataset. Machine learning models can only learn from what you give them. If the features don’t have any meaningful relationship to the outcome you’re trying to predict, the model won’t be able to perform well. Feature engineering, the process of selecting and transforming variables to improve model performance, is both an art and a science. You’ll also need to think about data leakage. This happens when your dataset contains information that wouldn’t be available at the time you’d make a prediction in the real world. Leakage can make your model look great during training but completely useless in practice (Kaufman et al., 2012).

In short, good predictive modeling starts with the right data. You don’t need a perfect dataset, but it should be large enough, well-structured, clean, and thoughtfully selected. A strong foundation here will save you from a lot of frustration later on, and it’ll help you build a model that actually tells you something useful.

3. The Value Proposition: What Predictive Models Can Offer Your Dissertation

Let’s be honest, for students seeking dissertation help, adding predictive modeling to a dissertation isn’t just about using a trendy technique, it has to add value. In academic work, every method needs a reason to be there. So, before you commit, it’s worth asking: What does a predictive model actually bring to the table that more traditional methods might not?

Going Beyond Description: Uncovering Patterns

Predictive models are great at picking up on patterns that may not be obvious at first glance. When your quantitative research involves working with large datasets, they can help you explore relationships between variables in a way that manual inspection or simple correlations might miss. For instance, in public health research, predictive models have been used to identify non-obvious combinations of risk factors for chronic disease (Oreskovic et al., 2019). This is especially useful in dissertations where you’re trying to explore multi-factor influences, like predicting academic performance based on dozens of social, psychological, and environmental variables. A predictive model can help you sift through that complexity and zero in on the factors that matter most.

Forecasting and Scenario Building

One of the most powerful contributions of predictive models is their ability to forecast outcomes. Let’s say you’re studying voting behavior, and you want to estimate how certain demographic shifts might affect future election results. With the right data, a predictive model can simulate how things might play out under different scenarios. This kind of analysis gives your dissertation work an applied edge. It doesn’t just explain what’s happening now, it offers a glimpse into what might happen next. That kind of insight can be really compelling, especially in fields like policy, economics, and environmental science (Mellon & Prosser, 2017).

Testing Real-World Utility

Predictive models are also practical. In many disciplines, especially applied ones, it’s not enough to know that a relationship exists; you want to know if your model can actually help in a real-world setting. For example, if you’re researching student retention, a model that accurately predicts which students are likely to drop out can be used by universities to intervene early and offer support (Dekker et al., 2009). This gives your research an actionable component. You’re not just advancing theory; you’re creating tools that others can use.

Complementing Traditional Research

Here’s the thing: Predictive modeling doesn’t have to replace traditional statistical approaches. In fact, some of the best dissertations use both. You might start with theory-driven hypotheses, test them using standard statistical methods, and then build a predictive model to explore the data further or confirm your findings. This kind of mixed approach can strengthen your dissertation. It shows that you’re not relying on one method alone and that you understand both the theory and the data (Shmueli, 2010).

Giving Your Work a Competitive Edge

Let’s be real: Using predictive modeling in a thoughtful way can make your dissertation stand out. It shows you’re comfortable with data, that you’re not afraid of advanced methods, and that you’re thinking about how your work connects to the real world. In a crowded field, especially if you’re considering post-academic jobs or industry roles, that kind of experience can be a big plus. Of course, this doesn’t mean you should use ML just to impress. But when used appropriately, predictive models can add serious weight to your findings and help your work make a bigger impact.

4. Toolkits and Platforms: What Should You Use?

So, you’ve decided that predictive modeling makes sense for your dissertation. Now comes the practical question: What tools should you use to build and test your models? With so many platforms and programming languages out there, it can feel overwhelming to choose the right one. The good news? You don’t need to be a tech wizard to get started, you just need the right tool for your skill level, data needs, and research goals.

Start with What You Know (Or Can Learn Quickly)

If you’re already comfortable with a tool like SPSS, R, or Excel, you might be surprised to learn that some of them already have basic machine learning features. For example, the SPSS Modeler includes options for decision trees and regression-based predictive modeling. It’s a drag-and-drop interface, so it’s a good choice for researchers who prefer not to code (Nassif et al., 2019). But if you’re willing to get a little more hands-on, platforms like Python and R give you far more flexibility and power. These are the two most commonly used programming languages for predictive modeling in both academic and industry settings.

Python: Friendly, Flexible, and Widely Used

Python is a favorite among data scientists, and for good reason. It has a clear, readable syntax that makes it easy for beginners to pick up. More importantly, it has a rich ecosystem of libraries specifically built for machine learning, including:

- Scikit-learn – Great for beginners. It includes easy-to-use functions for classification, regression, and clustering.

- XGBoost – A high-performance library for gradient boosting, often used in competitions and real-world applications.

- Pandas & NumPy – Essential for data wrangling and numerical analysis.

One of the best things about Python is the amount of community support. If you run into a problem, chances are someone else has too—and they’ve posted about it online.

R: Built for Statisticians, Loved by Researchers

R is another strong choice, especially if your academic background is in statistics or social science. It was designed specifically for data analysis, so many of its features feel more “researcher-friendly.” You’ll find lots of built-in functions for statistical tests, visualizations, and even publication-quality graphics. Popular ML packages in R include:

- Caret – A unified interface for dozens of machine learning algorithms.

- RandomForest – For decision tree-based models.

- Tidymodels – A modern, tidyverse-friendly suite for building and evaluating ML workflows (Kuhn & Wickham, 2020).

If your dissertation committee leans toward traditional methods, R might also be an easier sell, it blends classic stats with modern modeling in a way that feels more academic.

Cloud and No-Code Platforms: Fast but Limited

If you’re really pressed for time or coding just isn’t your thing, there are cloud-based platforms that let you build models without writing much (or any) code. Tools like Google Cloud AutoML, IBM Watson, or RapidMiner offer visual interfaces and automated workflows for building predictive models. These tools are fast and user-friendly, but they come with tradeoffs. You get less control over how your model works, and you may not fully understand what the algorithm is doing behind the scenes. For academic work, especially a dissertation, that could be a problem. You’ll still need to explain your methods clearly, justify your model choices, and report results transparently.

What About Integration?

Whatever platform you choose, make sure you can integrate your results into your dissertation writing. That means exporting figures, tables, and model summaries in a way that’s clean and reproducible. Python and R both support markdown and LaTeX, which can help automate parts of your write-up and keep everything consistent. Also, think about version control (using Git, for example) and organizing your scripts in a clear, readable way. This will help you stay sane during revisions, and it’ll impress anyone reviewing your work.

Bottom Line: Choose Tools That Match Your Research and Comfort Level

There’s no “best” tool, just the one that fits your needs. If you want full control and flexibility, Python or R are great choices. If you want simplicity and speed, SPSS Modeler or a cloud platform might be enough. Just remember: The tool should support your research goals, not distract from them. Focus on clarity, transparency, and usability. Those things matter more than fancy tech.

5. Validation, Evaluation, and Reporting: Academic-Grade Standards

Using a predictive model in your dissertation isn’t just about building something that works; it’s about proving that it works, showing how well it works, and explaining it clearly enough that someone else could reproduce your results. This is where validation, evaluation, and reporting come in. These steps are what turn a machine learning model from a side project into academically credible research.

Why Model Validation Matters

Validation is how you check whether your model is truly learning something useful or just memorizing your dataset. In machine learning, this is known as avoiding overfitting, when a model performs really well on the training data but falls apart when tested on new, unseen data. Overfitting is one of the most common pitfalls in predictive modeling and a big red flag in academic work (Hawkins, 2004). To prevent this, most researchers split their data into at least two parts: a training set (to teach the model) and a test set (to evaluate it). A better approach, especially for smaller datasets, is cross-validation, where the statistical data is repeatedly split into different training and test sets to get a more reliable estimate of performance. In academia, where rigor and transparency are critical, skipping this step is not an option. If your model wasn’t validated properly, your results may not hold up under scrutiny.

Key Evaluation Metrics (And Why Accuracy Isn’t Enough)

When it comes to evaluation, accuracy sounds like the most obvious metric (i.e., how often the model makes the correct prediction. But accuracy alone can be misleading, especially if your dataset is unbalanced. For instance, if only 5% of your cases are positive (say, predicting disease outbreaks), a model that just predicts “no outbreak” for everything would be 95% accurate while being completely useless. That’s why other metrics matter, like:

- Precision – Out of all positive predictions, how many were actually right?

- Recall – Out of all real positives, how many did the model catch?

- F1 Score – A balance between precision and recall.

- ROC AUC (Area Under the Curve) – How well the model separates the classes, useful for binary classification problems.

- Root Mean Square Error (RMSE) – For regression problems, it tells you how far off predictions are on average.

The right metric depends on your research question. If you’re building a model to help prioritize medical screenings, recall might be more important (you don’t want to miss anyone at risk). If you’re automating spam filters, precision might matter more (you don’t want to block valid emails). Academic evaluators will want to know that you’ve chosen your metrics thoughtfully and that you understand what they mean in context (Saito & Rehmsmeier, 2015).

Transparent Reporting Is Non-Negotiable

One thing that separates academic work from casual modeling is the level of detail in how you report your methods and results. It’s not enough to say, “I built a model, and it worked.” You need to explain:

- What kind of model did you use and why?

- How you prepared and cleaned your data.

- How you split and validate your data.

- What metrics did you use, and why do they matter?

- What limitations does your model have (e.g., small sample size, class imbalance, etc.)?

This isn’t just about showing your work; it’s about helping others understand and trust your findings. In academic journal publishing, reproducibility is key. If another researcher can’t replicate your results using your write-up, that’s a problem (Drummond, 2009). Tables, charts, and confusion matrices can also go a long way in communicating your model’s performance. Visualizing your results makes them easier to digest and helps your readers (including your dissertation committee) grasp what your model is actually doing.

Own the Limitations

No model is perfect. Your job isn’t to pretend it is, it’s to show that you’ve thought critically about what your model can and can’t do. A good dissertation will discuss trade-offs, assumptions, and risks. For example, was there bias in the data? Were certain groups underrepresented? Could your model’s predictions be misused? Taking the time to reflect on these issues not only strengthens your credibility but also shows you’re engaging with your research ethically and thoughtfully (Mehrabi et al., 2021).

6. Collaborating with Data Scientists or Advisors

If you’re a graduate student, either seeking dissertation help or working on a dissertation and diving into predictive modeling for the first time, chances are you won’t be doing it entirely on your own. And honestly, you shouldn’t. Machine learning can get technical fast, especially when it comes to model selection, tuning, and interpretation. This is where collaboration comes in. Teaming up with a data scientist, statistician, or technically skilled advisor can be a game-changer. Not just for getting your model to work but for making sure your approach is rigorous, ethical, and appropriate for your research question. That said, the collaboration needs to be done right.

Know What You’re Asking For

The first step is being clear on what kind of help you need. Are you looking for a statistical consultant to help you clean and structure your dataset? Choose the right algorithm? Interpret the results? Knowing this upfront helps both you and your collaborator avoid confusion down the line. You don’t need to become an expert in Python or R overnight, but you do need to understand the logic behind the model you’re using. In an academic setting, especially during dissertation defense, you are responsible for every method in your study. Outsourcing the technical work to a data analytics company is fine as long as you can explain it clearly and confidently (Wicherts et al., 2016).

Speak the Same Language (Or Learn It)

One challenge when working with data scientists is the communication gap. They might be focused on optimizing the model’s performance, while you’re more concerned about theoretical alignment, variable justification, or making sure the methods meet academic standards. That’s a common tension but not an impossible one. Take the time to learn the basics of machine learning terminology. Know the difference between a training set and a test set. Understand what “feature importance” means. You don’t need to build a model from scratch, but the more fluent you are, the smoother the collaboration will be (Grolemund & Wickham, 2017).

It also helps to be clear about the expectations from your academic program. Some departments are more conservative about using machine learning, especially in fields like psychology or sociology. Your dissertation coach or committee might want to see clear ties to theoretical frameworks, something that predictive models don’t always emphasize. Bring those concerns into your conversations with technical collaborators early on.

Finding the Right People

If you don’t have someone in your department who can support you technically, look outside of it. Many universities have research computing centers, data science labs, or even campus-wide consulting services for graduate students. You might also find peers in engineering or computer science who are looking for applied projects to work on. When reaching out, frame your project in terms of a collaborative opportunity. Be respectful of their time, and be ready to contribute your domain knowledge in return. In many cases, this becomes a two-way learning experience: technical folks get to apply their skills to a real-world academic question, and you get guidance through the modeling process.

Keeping Academic Ownership

While collaboration can make your life easier, it’s still your dissertation. You’ll be the one presenting the work, answering questions, and defending the methodology. Make sure you’re involved in every step of the modeling process, even if you’re not the one writing the code. Take notes during meetings, ask for annotated scripts, and review every decision made. The goal is to co-produce knowledge, not just borrow someone else’s. According to the principles of research integrity, authorship and credit should reflect actual intellectual contribution, not just labor (Resnik & Shamoo, 2011).

Document the Collaboration

If you do work with someone, make sure to document how they helped. In some cases, you might include an acknowledgment in your dissertation. In others, especially if the work was extensive or the collaborator contributed conceptually, co-authorship on a future paper might be appropriate. Be transparent about these roles in your dissertation write-up, it shows professionalism and ethical awareness.

7. Conclusion

As machine learning continues to shape the future of research and industry, integrating predictive models into your dissertation isn’t just a smart move; it’s a strategic one. Whether you’re working in public health, education, psychology, or business, predictive analytics can help you explore patterns, test real-world scenarios, and present your findings with modern relevance. But doing it well takes more than just curiosity; it requires the right guidance, tools, and support.

This is where professional help becomes crucial. Working with a dissertation coach, tapping into dissertation assistance, or consulting experts can make the difference between a rushed, error-prone model and a thoughtful, credible study. For students unfamiliar with coding or machine learning workflows, the right dissertation consulting team can walk you through everything from dataset preparation to model validation while keeping academic integrity front and center.

If you’re unsure where to start with statistical analysis or need hands-on support with your model, connecting with a statistics consultant can bring clarity. Whether you need help analyzing your data, interpreting your model, or preparing for defense, we’ll make sure your work is methodologically sound and academically rigorous. And if you’re looking for technical firepower, we collaborate with leading data analytics companies, including specialists from data analytics firms, even the top data analytics companies in the USA. With Precision Consulting, you’ll get the best of both worlds: academic insight and technical precision. Don’t navigate this alone. Let us help your future-proof your research and confidently cross the finish line.

References

Bzdok, D., Altman, N., & Krzywinski, M. (2018). Statistics versus machine learning. Nature Methods, 15(4), 233–234. https://doi.org/10.1038/nmeth.4642

Dekker, G. W., Pechenizkiy, M., & Vleeshouwers, J. M. (2009). Predicting students drop out: A case study. International Working Group on Educational Data Mining (EDM).

Drummond, C. (2009). Replicability is not reproducibility: Nor is it good science. Proceedings of the Evaluation Methods for Machine Learning Workshop at the 26th ICML.

Hawkins, D. M. (2004). The problem of overfitting. Journal of Chemical Information and Computer Sciences, 44(1), 1–12. https://doi.org/10.1021/ci0342472

Hyndman, R. J., & Athanasopoulos, G. (2018). Forecasting: Principles and Practice (2nd ed.). OTexts. https://otexts.com/fpp2/

James, G., Witten, D., Hastie, T., & Tibshirani, R. (2013). An Introduction to Statistical Learning. Springer. https://doi.org/10.1007/978-1-4614-7138-7

Jordan, M. I., & Mitchell, T. M. (2015). Machine learning: Trends, perspectives, and prospects. Science, 349(6245), 255-260. https://doi.org/10.1126/science.aaa8415

Kaufman, S., Rosset, S., Perlich, C., & Stitelman, O. (2012). Leakage in data mining: Formulation, detection, and avoidance. ACM Transactions on Knowledge Discovery from Data, 6(4), 15. https://doi.org/10.1145/2382577.2382579

Kuhn, M., & Johnson, K. (2013). Applied Predictive Modeling. Springer. https://doi.org/10.1007/978-1-4614-6849-3

Kuhn, M., & Wickham, H. (2020). Tidymodels: A collection of packages for modeling and machine learning using tidyverse principles. https://www.tidymodels.org

Mehrabi, N., Morstatter, F., Saxena, N., Lerman, K., & Galstyan, A. (2021). A survey on bias and fairness in machine learning. ACM Computing Surveys (CSUR), 54(6), 1–35. https://doi.org/10.1145/3457607

Mellon, J., & Prosser, C. (2017). Twitter and Facebook are not representative of the general population: Political attitudes and demographics of British social media users. Research & Politics, 4(3), 1–9. https://doi.org/10.1177/2053168017720008

Nassif, A. B., Talib, M. A., Nasir, Q., & Khelifi, A. (2019). A survey on machine learning algorithms for predicting software quality. Journal of Computer Science, 15(4), 552–568. https://doi.org/10.3844/jcssp.2019.552.568

Obermeyer, Z., Powers, B., Vogeli, C., & Mullainathan, S. (2019). Dissecting racial bias in an algorithm used to manage the health of populations. Science, 366(6464), 447–453. https://doi.org/10.1126/science.aax2342

Oreskovic, N. M., Huang, T. T., Moon, J., et al. (2019). Integrating predictive modeling and public health decision making: A case study of childhood obesity prevention. American Journal of Public Health, 109(S2), S131–S135. https://doi.org/10.2105/AJPH.2019.305001

Rahm, E., & Do, H. H. (2000). Data cleaning: Problems and current approaches. IEEE Data Engineering Bulletin, 23(4), 3–13.

Resnik, D. B., & Shamoo, A. E. (2011). The Singapore Statement on Research Integrity. Accountability in Research, 18(2), 71–75. https://doi.org/10.1080/08989621.2011.557296

Saito, T., & Rehmsmeier, M. (2015). The precision-recall plot is more informative than the ROC plot when evaluating binary classifiers on imbalanced datasets. PLoS ONE, 10(3), e0118432. https://doi.org/10.1371/journal.pone.0118432

Shmueli, G. (2010). To Explain or to Predict?. Statistical Science, 25(3), 289–310. https://doi.org/10.1214/10-STS330